The Real Lolita

In her new book, Sarah Weinman unearths the forgotten kidnapping case behind Vladimir Nabokov’s literary sensation.

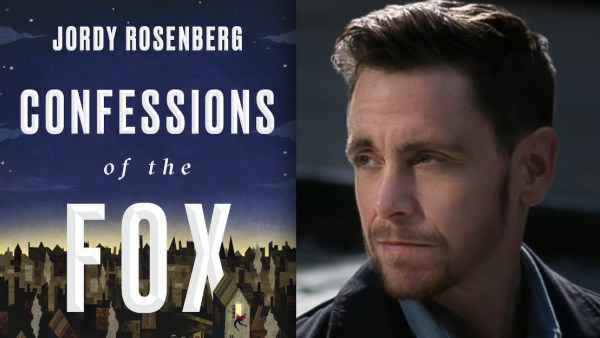

The author of the groundbreaking new novel “Confessions of the Fox” talks about creating a transgender hero in 18th-century London.

A new book unveils how two giants of their fields — a master of science fiction and a visionary of…

The Nobel Prize-winning writer turns in a tale of scandal and murder set against the backdrop of a corrupt Peruvian…

In the new novel from the author of “Cold Mountain,” the cataclysm of the Civil War is seen through a…

The new novel from the author of “The Interestings” traces a young woman’s life after it’s reshaped by a vital…

Recreating a legendary expedition through Canada’s vast northern wilds brings the writer face to face with our permanent effect on…

The author of “The Line of Beauty” traces a mystery across three generations of gay life in Britain.

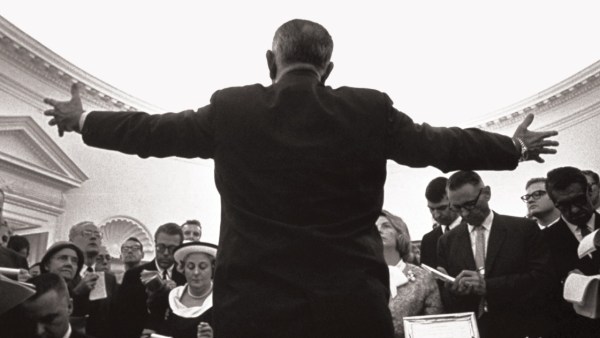

A new book about LBJ takes on a presidency of Shakespearean dimensions.

In her most unsettling works of fiction, Barbara Comyns took fairy tales in strange and revealing new directions.

The late writer William Gass is remembered by many for his monumental, groundbreaking novels. David L. Ulin argues for the…

A poet and music critic’s boundary-smashing new collection proves to be one of the year’s most vital documents on an…

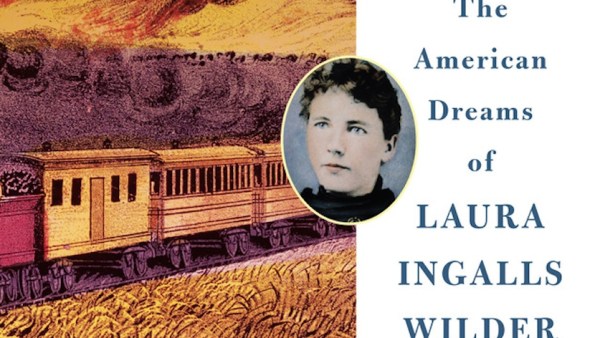

The history behind the beloved Little House books was one of endurance and suffering — and family myth and infighting…

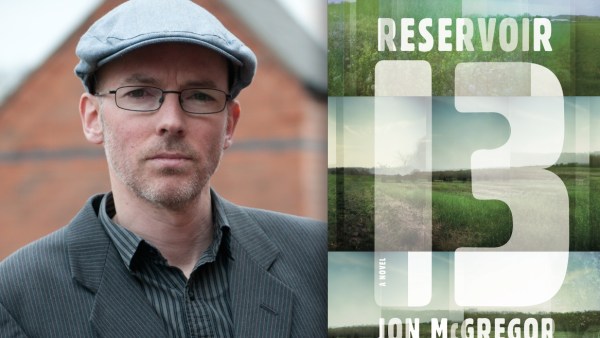

Award-winning novelist Maile Meloy talks with Jon McGregor about his powerful new story of a girl’s disappearance and its aftermath.

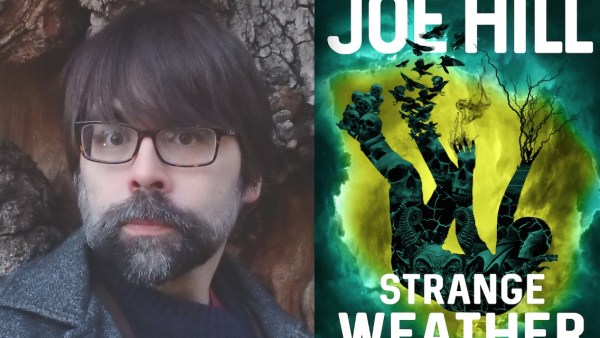

Just in time for Halloween, the bestselling author of “Strange Weather: Four Short Novels” shares his list of prescient favorite…

The creator of the world’s most mysterious work of art was himself an open book. Michael O’Donnell reviews Walter Isaacson’s…

The author of the new memoir “Happiness” talks with Bret Anthony Johnston about her journey along a “crooked little road…

One of the foremost chroniclers of the Putin era dives deep into how the trauma of the Soviet era still…

The master of the Cold War spy thriller returns to the aftermath of one of his classic works, and to…

In the wake of Christopher Nolan’s film version of the iconic rescue of British forces comes Michael Korda’s account. Review…